Re-evaluation on MultiPaxos under partial network partition from the OmniPaxos paper

Published:

TL;DR: OmniPaxos proposes some changes in MultiPaxos to deal with partial network partitions, where classic MultiPaxos leads to deadlocks, according to the authors. However, we show that it is not the case for a properly implemented MultiPaxos. Even without the complicated optimization in OmniPaxos, MultiPaxos can easily elect a new stable leader under partial network partitions.

The OmniPaxos paper from EuroSys’23 proposes a new approach to deal with network partitions issues in Replicated State Machines (RSMs). The partial network partition refers to a scenario where two servers are unreachable from each other but connected by a third server. The authors argue that, under partial network connectivity, traditional RSM systems (like MultiPaxos, Raft, VR) may succumb to degraded performance and complete loss of liveness due to deadlocks or livelocks.

What is partial network partition?

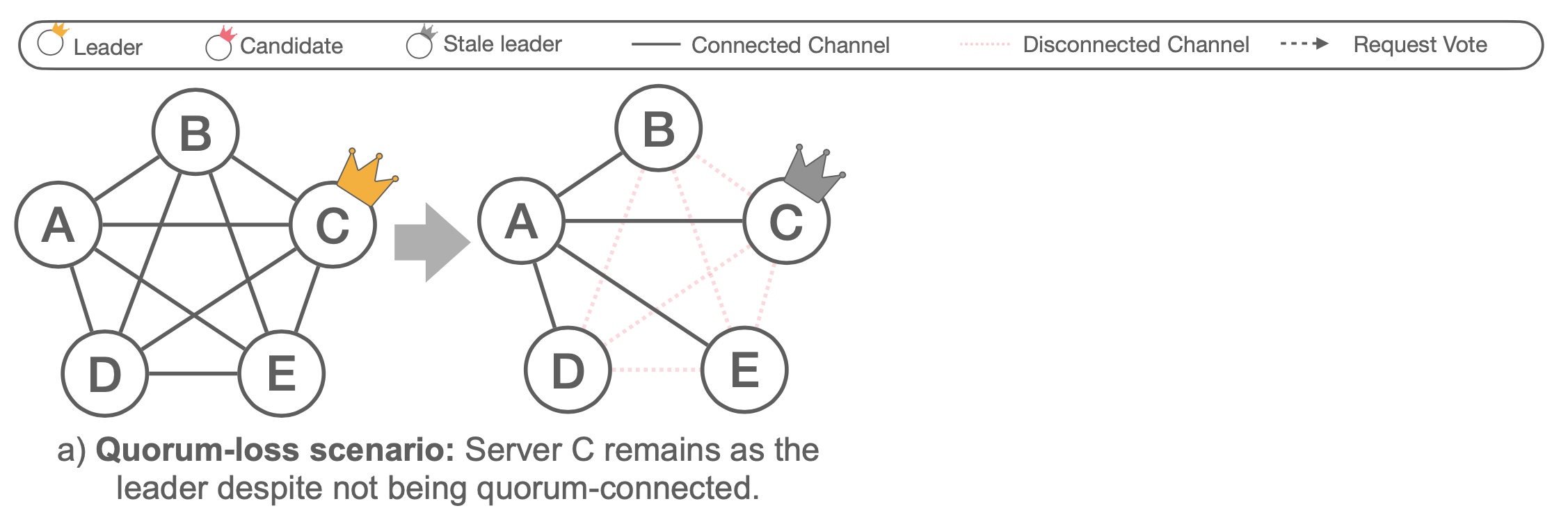

This paper identifies and focuses on three types of partial network connectivity that can lead to livelocks in existing RSM systems. According to the paper, MultiPaxos can handle the constrained election scenario but failed to proceed under the quorum-loss scenario. However, our implementation shows that MultiPaxos can handle both cases. So, we will explain what the quorum-loss scenario is, which is shown in the graph borrowed from the paper.

Quorum-loss scenario. Given an initial cluster of five fully-connected servers, where Server C is the stable leader, the quorum-loss scenario may arise when all servers are connected to Server A only and disconnected from the rest. In this case, Server B, D, and E cannot become the leader because they only receive votes from Server A and itself at most, which is less than the majority requirement, while Server A will not start the leader election because it can still receive heartbeats from the existing leader C. The authors argue that MultiPaxos cannot make any progress because no leader will be elected (However, we will show that MultiPaxos can handle this scenario as well in the later section).

How OmniPaxos deals with it?

This paper proposes a solution by introducing a concept called quorum-connected servers for leader election and decoupling leader election and log replication. A quorum-connected server maintains connections to at least the majority of servers.

All servers exchange the information of which servers are quorum-connected periodically. When the leader election happens, servers only vote for the candidate with the highest ballot that is quorum-connected. Additionally, OmniPaxos separates the modules of leader election, log replication, and reconfiguration, which eliminates the requirement of having the max log for the leader election. With these two main changes, OmniPaxos ensures a stable leader can always be elected under the three partial network partition scenarios above.

Can MultiPaxos survive under the quorum-loss scenario?

Despite the claim from the paper, we disagree with the claim and evaluation results in the paper that MultiPaxos leads to a deadlock in the case of the quorum-loss scenario. We followed the classic MultiPaxos protocol and implemented it in C++. Here is the link to our repo. We reproduced the quorum-loss scenario multiple times, and MultiPaxos can always easily elect a new stable leader.

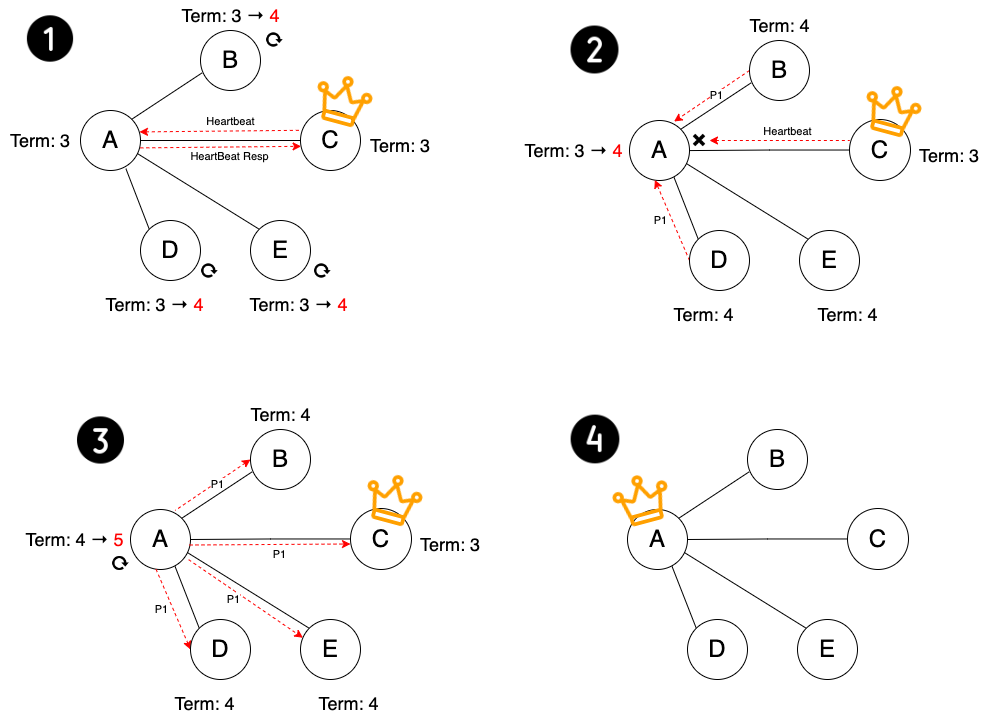

In our MultiPaxos implementation, any peer that receives majority votes in the prepare phase becomes a leader. The leader sends out heartbeat messages periodically to broadcast its leadership. If a follower does not receive heartbeats before the timeout, the peer will start the leader election. Additionally, the heartbeat message also piggybacks information of commit and log compaction. As shown in the figure, there are several steps as follows.

- When this quorum-loss scenario happens, all servers, except for Server A, lose the connection with C.

- Then Server B, D, or E will trigger the leader election with a higher ballot and send prepare (P1) requests to A. Because of the higher ballot, Server A will give a promise to one of them and update its own ballot. Consequently, Server A will no longer respond to the heartbeat from the old leader C.

- In the meantime, Server B, D, and E cannot become a new leader, and A will not receive any heartbeats from the new leader, resulting in a timeout and another round of leader election initialized by A.

- A will be the new leader, and the system will return to normal and make progress.

Reevaluation on MultiPaxos under the quorum-loss scenario

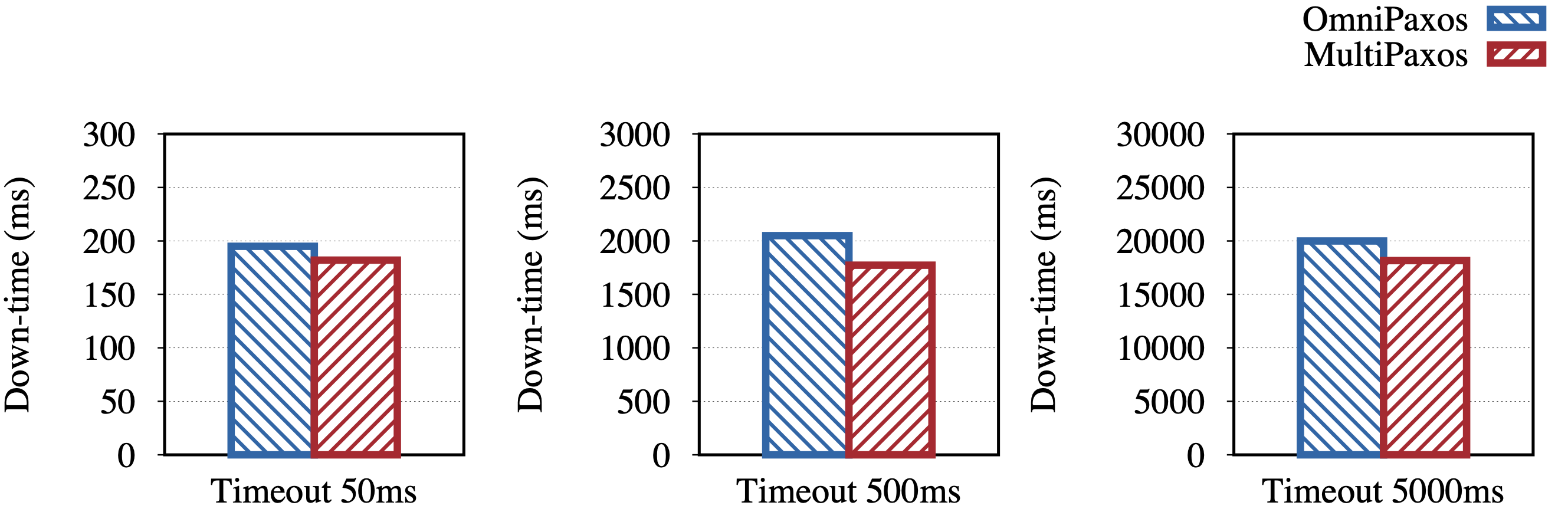

We evaluated our MultiPaxos implementation using the same experiment setting from the OmniPaxos paper. We deployed five MultiPaxos instances on AWS m5.2xlarge machines (8 cores and 32 GiB RAM). We repeated experiments with timeouts of 50, 500, and 5000 ms, respectively.

As shown in the figure above, it contradicts the claim that MultiPaxos would result in a deadlock in the quorum-loss scenario. Though we do not optimize MultiPaxos specifically for the partial network partition, the result shows that MultiPaxos exhibits even better downtime performance than OmniPaxos. Compared with OmniPaxos, MultiPaxos does not need to disseminate and relay the information of quorum connectivity, thereby reducing the number of messages exchanged among peers. Furthermore, MultiPaxos does not need extra policies for leader election, but it still can achieve similar performance without those implementation overheads.

This is a part of our ongoing work at Penn State, advised by my advisor Dr. Abutalib Aghayev and our collaborator Dr. Aleksey Charapko.